Why you should care about K8s workload privileges

Introduction

Kubernetes offers a robust platform for deploying and managing containerized applications. However, it is crucial to prioritize security in order to safeguard other workloads and the infrastructure from potential attacks.

In today’s article we will review the options that developers, operators and administrators have when configuring workloads on Kubernetes. We will look at some of the default settings and see why you should not use them in production. Finally, we will discuss how to audit and enforce the workload parameters.

Containerization

It is common knowledge that running workloads in containers brings isolation, among other benefits. The container runtime uses cgroups, Linux namespaces (not to be confused with K8s namespaces) and other mechanisms to separate the workload from the host operating system. But is the workload really strictly separated? In K8s, that depends on how you configure the workload and what privileges it gets.

Workload settings

The smallest workload entity in Kubernetes is a pod. A pod is a group of one or more containers that share the network Linux namespace and can also share other Linux namespaces. The main container runs the actual application. If needed, additional containers can be added, for example to prepare the filesystem or fetch initialization data.

To take advantage of what Kubernetes offers, you will typically use a higher-level construct, such as a Deployment or a StatefulSet. But the security settings are always set at the pod level (or at the container level, as we will see).

When you issue the command <c-code>kubectl explain pod.spec<c-code>, you will find, among others, the following settings we are interested in: <c-code>hostIPC<c-code>, <c-code>hostNetwork<c-code>, <c-code>hostPID<c-code>, and <c-code>securityContext<c-code>.

There are also other parameters that might make the pod less isolated, such as <c-code>volumes.hostPath<c-code>.

By default none of these parameters is set, which is a good thing for those beginning with host*:

- <c-code>hostIPC<c-code> lets the pod processes share the IPC namespace with the host. If enabled, the processes can interact with other processes running in the host namespace.

- <c-code>hostNetwork<c-code> makes the pod share the network namespace with the host, so the processes can interact with the host networking stack.

- <c-code>hostPID<c-code> makes the pod processes share the PID namespace with the host and thus allows the container processes to see other processes running on the host.

Enabling any of these three parameters would mean the containers in this pod are not strictly isolated from the host.
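As a minimal sketch, the three host namespace settings can also be stated explicitly in the pod spec. The pod name and image below are hypothetical placeholders, not taken from any real deployment.

```yaml
# Hypothetical pod; name and image are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: isolated-example
spec:
  # These three settings are off when not set. Stating them
  # explicitly makes the intent visible to reviewers and tools.
  hostIPC: false
  hostNetwork: false
  hostPID: false
  containers:
    - name: app
      image: registry.example.com/app:1.0
```

Setting the values explicitly does not change the behavior; it only documents that host namespace sharing was considered and rejected for this workload.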

The <c-code>securityContext<c-code> property is not set by default either, but some of its default properties have consequences. Let’s see what the <c-code>securityContext<c-code> is capable of.

securityContext

The <c-code>securityContext<c-code> allows you to specify security-related settings of the workload. It’s worth mentioning that the <c-code>securityContext<c-code> can be set at the pod level, but also at the individual container level. Some parameters can be set at only one of the two levels and some at both; when a parameter is set at both, the container-level value takes precedence. The following table summarizes all of them for K8s API version 1.28.

As you can see, there are quite a number of <c-code>securityContext<c-code> parameters and explaining each one of them is beyond the aim of this article. The point is to emphasize the importance of setting the <c-code>securityContext<c-code> and let you explore all the available options. You can find them in the Kubernetes documentation.
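To illustrate how the pod-level and container-level settings interact, here is a minimal sketch. The pod name, container names, image references and numeric IDs are hypothetical and chosen only for illustration.

```yaml
# Hypothetical pod showing pod-level vs. container-level securityContext.
apiVersion: v1
kind: Pod
metadata:
  name: precedence-example
spec:
  securityContext:
    runAsUser: 1000      # applies to every container unless overridden
    runAsGroup: 3000
    fsGroup: 2000        # available at the pod level only
  containers:
    - name: app
      image: registry.example.com/app:1.0
      # No container-level securityContext: runs as user 1000.
    - name: sidecar
      image: registry.example.com/sidecar:1.0
      securityContext:
        runAsUser: 2000                  # overrides the pod-level 1000
        allowPrivilegeEscalation: false  # available at the container level only
```

In this sketch the <c-code>app<c-code> container inherits the pod-level user ID, while the <c-code>sidecar<c-code> container runs under its own.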

So which parameters might be those that you want to check first?

runAsUser, runAsNonRoot, and runAsGroup

The parameter names give hints about their purpose. What may not be that obvious is their impact if not set. When building a container, there is the <c-code>USER<c-code> directive in the Dockerfile that dictates what user will be used to run the container. If not specified, the default is root. If you don’t specify <c-code>runAsUser<c-code> and <c-code>runAsGroup<c-code> for such an image, guess what user account it will run under.

While running the container as root may not be an immediate issue, combining this with other settings could pose a significant threat. Running the process under the root account can lead to privilege escalation and container escape scenarios.

If your container doesn’t need to write to the filesystem, it might be sufficient to use just the <c-code>runAsNonRoot<c-code> property, which ensures that the image doesn’t run as root. If the image uses the root user by default, the container will fail to start. In that case you can use <c-code>runAsUser<c-code> instead and specify a different user ID. Note that some applications might have trouble running under a different ID than the one the developer used when building the image.

When the container needs to write to the filesystem, the <c-code>runAsGroup<c-code> property comes in handy together with the pod-level <c-code>fsGroup<c-code> securityContext property. In that case you mount a volume and K8s makes sure the proper permissions, based on the <c-code>fsGroup<c-code> setting, are set on it. Please note that not all persistent volume providers support this setting.
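The combination described above could look like the following sketch. The pod name, image, numeric IDs, mount path and claim name are hypothetical, and the persistent volume claim is assumed to exist already.

```yaml
# Hypothetical pod running as a non-root user with a writable volume.
apiVersion: v1
kind: Pod
metadata:
  name: nonroot-example
spec:
  securityContext:
    runAsNonRoot: true   # refuse to start containers that would run as UID 0
    runAsUser: 10001     # assumed UID; the image must work under it
    runAsGroup: 10001
    fsGroup: 10001       # group applied to the mounted volume, where supported
  containers:
    - name: app
      image: registry.example.com/app:1.0
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: app-data   # assumed to exist in the same namespace
```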

capabilities

The <c-code>capabilities<c-code> property is a list of Linux capabilities the process can use to interact with the kernel. Capabilities can be added or dropped. By default, the container gets a set of capabilities from the container runtime, and this set might already be overly permissive. It is good practice to drop all (default) capabilities and add back just those that the process really needs. This is a better approach than running the container as root or in privileged mode, because it minimizes the process permissions.
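A minimal sketch of the drop-all-then-add approach follows. The pod name and image are hypothetical, and <c-code>NET_BIND_SERVICE<c-code> is only an assumed example of a capability a web server might need; note that Kubernetes expects capability names without the <c-code>CAP_<c-code> prefix.

```yaml
# Hypothetical pod that drops every capability and adds one back.
apiVersion: v1
kind: Pod
metadata:
  name: capabilities-example
spec:
  containers:
    - name: web
      image: registry.example.com/web:1.0
      securityContext:
        capabilities:
          drop: ["ALL"]               # remove the runtime's default set
          add: ["NET_BIND_SERVICE"]   # assumed requirement: bind to ports below 1024
```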

privileged and allowPrivilegeEscalation

<c-code>privileged<c-code> mode can be seen as equivalent to giving the process root rights on the host. By default, the container runtime limits the capabilities available to the container. If you use privileged mode, these limits are lifted and the container can use any capability.

Setting <c-code>allowPrivilegeEscalation<c-code> to true lets the process gain more privileges than its parent process, that is, more than those granted through <c-code>capabilities<c-code> (or the defaults coming from the container runtime). This setting is always true if <c-code>privileged<c-code> mode is enabled or the <c-code>CAP_SYS_ADMIN<c-code> capability is added.
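As a sketch, both settings can be switched off explicitly at the container level. The pod name and image are hypothetical.

```yaml
# Hypothetical pod with privileged mode and privilege escalation disabled.
apiVersion: v1
kind: Pod
metadata:
  name: no-escalation-example
spec:
  containers:
    - name: app
      image: registry.example.com/app:1.0
      securityContext:
        privileged: false                # keep the runtime's capability limits
        allowPrivilegeEscalation: false  # the process cannot gain more privileges than its parent
```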

readOnlyRootFilesystem

If the container doesn’t need to write to the filesystem, this property should be set to true. Actually, it should always be set to true. If the container stores data on a filesystem, the exact locations must be known and explicitly defined. These locations can be mapped to persistent volumes (for persistent data) or to <c-code>emptyDir<c-code> or <c-code>ephemeral<c-code> types of volumes (for temporary data).
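The following sketch shows a read-only root filesystem combined with an <c-code>emptyDir<c-code> volume for temporary data. The pod name, image and the <c-code>/tmp<c-code> mount path are assumptions made for illustration.

```yaml
# Hypothetical pod with a read-only root filesystem and one writable scratch location.
apiVersion: v1
kind: Pod
metadata:
  name: readonly-example
spec:
  containers:
    - name: app
      image: registry.example.com/app:1.0
      securityContext:
        readOnlyRootFilesystem: true
      volumeMounts:
        - name: tmp
          mountPath: /tmp   # assumed to be the only path the app writes to
  volumes:
    - name: tmp
      emptyDir: {}          # temporary data, removed together with the pod
```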

Hardened pod example

Adhering to the principle of least privilege is always the best approach. Every workload you run should have only those permissions that it needs for its functionality. Most workloads don't need any special permissions, so you should limit their privileges. Please see the following example for such definition:
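A minimal sketch of such a hardened pod is given here. The pod name, image and user ID are hypothetical placeholders; the security settings are the ones discussed in the previous sections.

```yaml
# Hypothetical hardened pod; name, image and UID are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: hardened-example
spec:
  containers:
    - name: app
      image: registry.example.com/app:1.0
      securityContext:
        runAsNonRoot: true
        runAsUser: 10001
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop: ["ALL"]
```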

The container running in this pod will not run as root, it won’t have any Linux capabilities and cannot gain any by escalating privileges, and also cannot write to the filesystem.

Of course there will be workloads that need more than minimal permissions. These can be, for example, logging agents (which need access to the node filesystem) or ingress controllers (which may use hostPorts or host networking). But they should be treated with care and given exactly those permissions that they need.

Continue reading to find out how you can monitor what permissions your workloads use, how you can prevent them using unnecessary permissions and how you can set exceptions.

How to improve the security of your environment

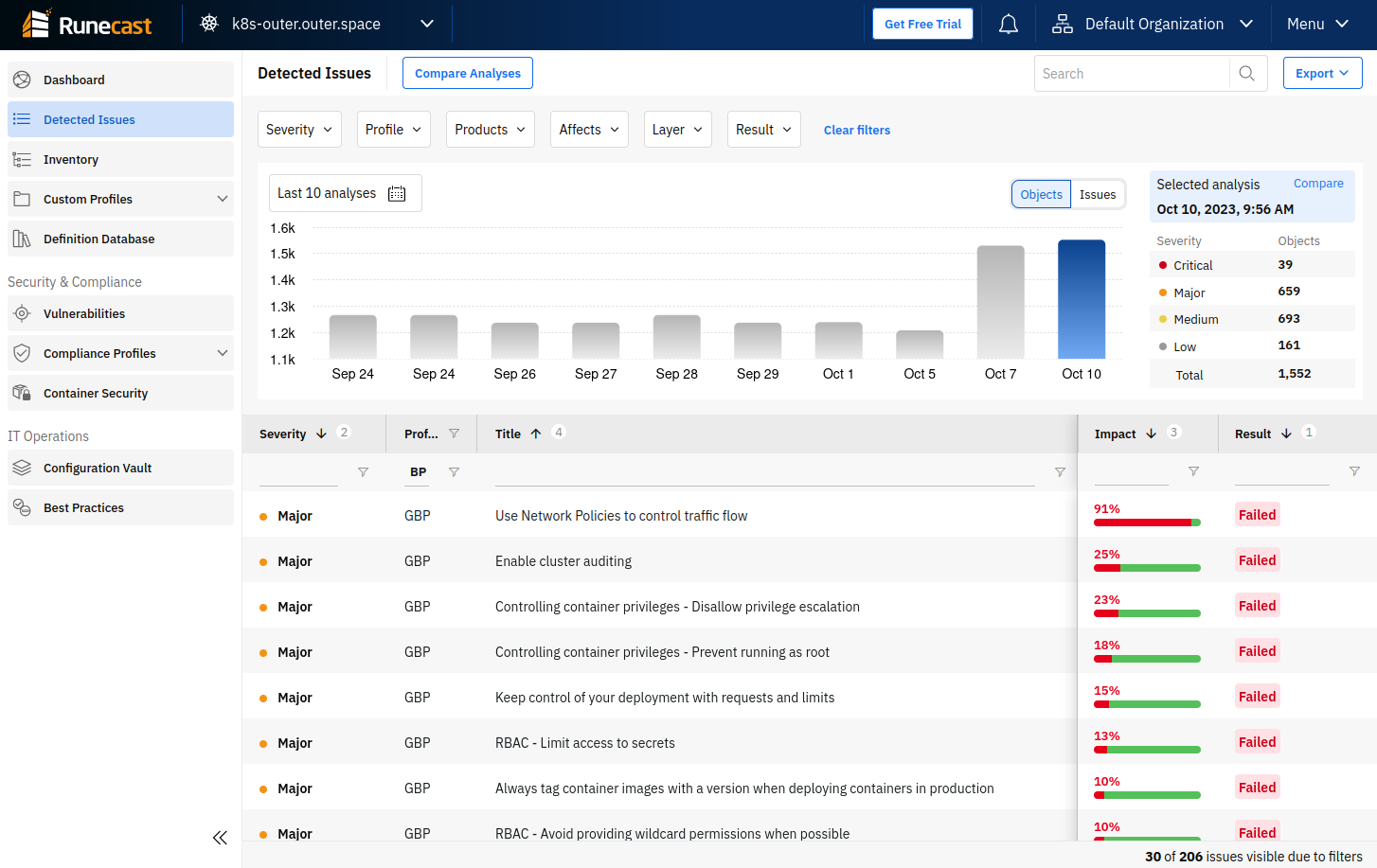

Now that we know what to focus on, how can we automatically monitor what is happening in the cluster? That’s where Runecast steps in. Besides cluster security, compliance benchmarks for K8s, and container image scanning, Runecast lets you analyze the workloads running in the cluster and watch their security settings. There are a number of open source tools that can help you with some of these tasks (like kube-bench or checkov). What Runecast adds on top of them is continuous analysis and historical evidence, so you can watch trends and follow changes that happen in the cluster.

With monitoring in place, we can react by modifying the manifests or charts to adhere to our standards or to the security benchmarks we follow. But what if we wanted to prevent potentially dangerous workload settings in the first place? In that case we can use admission webhooks, which check the security settings of a workload before it is admitted to the cluster.

Kubernetes introduced Pod Security Standards (PSS), which define three isolation levels: Restricted, Baseline, and Privileged. Baseline is already fairly strict and prevents the use of host namespaces, privileged containers, hostPath volumes, hostPorts, and so on.

Combining the PSS with the Pod Security Admission (PSA) controller (stable since Kubernetes 1.25) makes it pretty easy to control the workload settings. The only thing you need to do is label a namespace properly and you can start using this feature out of the box. You can start with the warn or audit modes and, once you have confirmed the required policy for each namespace, switch to enforce, which ensures that the selected isolation level is enforced on the namespace.
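As a sketch of the namespace labeling, the manifest below enforces the Baseline level while warning and auditing against the Restricted level. The namespace name is hypothetical, and the choice of levels per mode is only an example of a gradual rollout.

```yaml
# Hypothetical namespace labeled for the Pod Security Admission controller.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    pod-security.kubernetes.io/enforce: baseline   # reject pods that violate Baseline
    pod-security.kubernetes.io/warn: restricted    # warn the user about Restricted violations
    pod-security.kubernetes.io/audit: restricted   # record Restricted violations in the audit log
```

Once the warnings and audit entries for the stricter level disappear, the enforce label can be raised to the same level.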

If PSS/PSA are not flexible enough for your needs, you can go further and use third-party admission solutions that offer more fine-grained policies.

Conclusion

We hope this article helped you understand that monitoring and controlling the privileges of containerized workloads is a vital part of hardening your clusters. Without limits on workload privileges, a vulnerable workload becomes a paved path to further attacks on other workloads and the underlying infrastructure.

As the Kubernetes world is changing even faster than before, you should automate the monitoring part with the help of solutions like Runecast. You might also use admission webhooks to audit and enforce the security policies you define for your clusters.

If you are interested in K8s security, watch our webinar recording where we discuss this topic more broadly and watch this space for any future updates.
